Note:

The chapter describes functions for image processing and analysis.

Most of the functions work with 2d arrays of pixels. We refer the arrays

as "images" however they do not necessarily have to be IplImage’s, they may

be CvMat’s or CvMatND’s as well.

Calculates first, second, third or mixed image derivatives using extended Sobel operator

void cvSobel( const CvArr* src, CvArr* dst, int xorder, int yorder, int aperture_size=3 );

aperture_size=1 3x1 or 1x3 kernel is used (Gaussian smoothing is not done).

There is also special value CV_SCHARR (=-1) that corresponds to 3x3 Scharr filter that may

give more accurate results than 3x3 Sobel. Scharr aperture is:

| -3 0 3| |-10 0 10| | -3 0 3|for x-derivative or transposed for y-derivative.

The function cvSobel calculates the image derivative by convolving the image

with the appropriate kernel:

dst(x,y) = dxorder+yordersrc/dxxorder•dyyorder |(x,y)The Sobel operators combine Gaussian smoothing and differentiation so the result is more or less robust to the noise. Most often, the function is called with (xorder=1, yorder=0, aperture_size=3) or (xorder=0, yorder=1, aperture_size=3) to calculate first x- or y- image derivative. The first case corresponds to

|-1 0 1| |-2 0 2| |-1 0 1|

kernel and the second one corresponds to

|-1 -2 -1| | 0 0 0| | 1 2 1| or | 1 2 1| | 0 0 0| |-1 -2 -1|kernel, depending on the image origin (

origin field of IplImage structure).

No scaling is done, so the destination image usually has larger by absolute value numbers than

the source image. To avoid overflow, the function requires 16-bit destination image if

the source image is 8-bit. The result can be converted back to 8-bit using cvConvertScale or

cvConvertScaleAbs functions. Besides 8-bit images the function

can process 32-bit floating-point images.

Both source and destination must be single-channel images of equal size or ROI size.

Calculates Laplacian of the image

void cvLaplace( const CvArr* src, CvArr* dst, int aperture_size=3 );

The function cvLaplace calculates Laplacian of the source image by summing

second x- and y- derivatives calculated using Sobel operator:

dst(x,y) = d2src/dx2 + d2src/dy2

Specifying aperture_size=1 gives the fastest variant that is equal to

convolving the image with the following kernel:

|0 1 0| |1 -4 1| |0 1 0|

Similar to cvSobel function, no scaling is done and the same combinations of input and output formats are supported.

Implements Canny algorithm for edge detection

void cvCanny( const CvArr* image, CvArr* edges, double threshold1,

double threshold2, int aperture_size=3 );

The function cvCanny finds the edges on the input image image and marks them in the

output image edges using the Canny algorithm. The smallest of threshold1 and

threshold2 is used for edge linking, the largest - to find initial segments of strong edges.

Calculates feature map for corner detection

void cvPreCornerDetect( const CvArr* image, CvArr* corners, int aperture_size=3 );

The function cvPreCornerDetect calculates the function

Dx2Dyy+Dy2Dxx - 2DxDyDxy

where D? denotes one of the first image derivatives and D?? denotes a second image

derivative. The corners can be found as local maximums of the function:

// assume that the image is floating-point IplImage* corners = cvCloneImage(image); IplImage* dilated_corners = cvCloneImage(image); IplImage* corner_mask = cvCreateImage( cvGetSize(image), 8, 1 ); cvPreCornerDetect( image, corners, 3 ); cvDilate( corners, dilated_corners, 0, 1 ); cvSubS( corners, dilated_corners, corners ); cvCmpS( corners, 0, corner_mask, CV_CMP_GE ); cvReleaseImage( &corners ); cvReleaseImage( &dilated_corners );

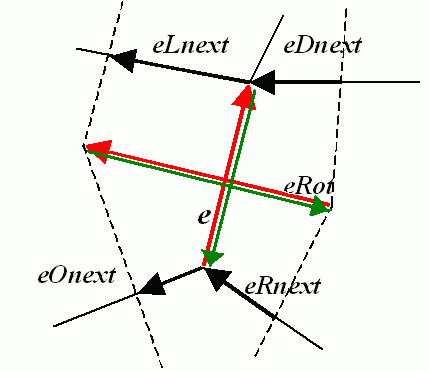

Calculates eigenvalues and eigenvectors of image blocks for corner detection

void cvCornerEigenValsAndVecs( const CvArr* image, CvArr* eigenvv,

int block_size, int aperture_size=3 );

For every pixel The function cvCornerEigenValsAndVecs considers

block_size × block_size neighborhood S(p). It calculates

covariation matrix of derivatives over the neighborhood as:

| sumS(p)(dI/dx)2 sumS(p)(dI/dx•dI/dy)|

M = | |

| sumS(p)(dI/dx•dI/dy) sumS(p)(dI/dy)2 |

After that it finds eigenvectors and eigenvalues of the matrix and stores

them into destination image in form

(λ1, λ2, x1, y1, x2, y2),

where

λ1, λ2 - eigenvalues of M; not sorted

(x1, y1) - eigenvector corresponding to λ1

(x2, y2) - eigenvector corresponding to λ2

Calculates minimal eigenvalue of gradient matrices for corner detection

void cvCornerMinEigenVal( const CvArr* image, CvArr* eigenval, int block_size, int aperture_size=3 );

image

The function cvCornerMinEigenVal is similar to cvCornerEigenValsAndVecs but

it calculates and stores only the minimal eigenvalue of derivative covariation matrix for every pixel,

i.e. min(λ1, λ2) in terms of the previous function.

Harris edge detector

void cvCornerHarris( const CvArr* image, CvArr* harris_dst,

int block_size, int aperture_size=3, double k=0.04 );

image

The function cvCornerHarris finds feature points (corners) in the image

using Harris' method. Similarly to

cvCornerMinEigenVal and

cvCornerEigenValsAndVecs,

for each pixel it calculates 2x2 gradient covariation matrix

M over block_size×block_size neighborhood.

Then, it stores

det(M) - k*trace(M)2to the corresponding pixel of the destination image. The corners can be found as local maxima in the destination image.

Refines corner locations

void cvFindCornerSubPix( const CvArr* image, CvPoint2D32f* corners,

int count, CvSize win, CvSize zero_zone,

CvTermCriteria criteria );

win=(5,5) then

5*2+1 × 5*2+1 = 11 × 11 search window is used.

criteria may specify either of or both the maximum

number of iteration and the required accuracy.

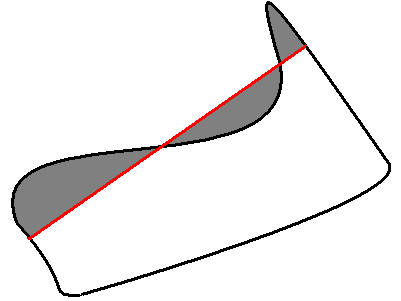

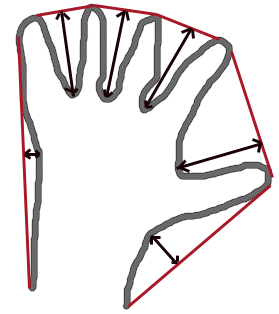

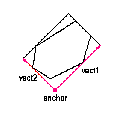

The function cvFindCornerSubPix iterates to find the sub-pixel accurate location

of corners, or radial saddle points, as shown in on the picture below.

Sub-pixel accurate corner locator is based on the observation that every vector

from the center q to a point p located within a neighborhood of q is orthogonal

to the image gradient at p subject to image and measurement noise. Consider the expression:

εi=DIpiT•(q-pi)where

DIpi is the image gradient

at the one of the points pi in a neighborhood of q.

The value of q is to be found such that εi is minimized.

A system of equations may be set up with εi' set to zero:

sumi(DIpi•DIpiT)•q - sumi(DIpi•DIpiT•pi) = 0

where the gradients are summed within a neighborhood ("search window") of q.

Calling the first gradient term G and the second gradient term b gives:

q=G-1•b

The algorithm sets the center of the neighborhood window at this new center q

and then iterates until the center keeps within a set threshold.

Determines strong corners on image

void cvGoodFeaturesToTrack( const CvArr* image, CvArr* eig_image, CvArr* temp_image,

CvPoint2D32f* corners, int* corner_count,

double quality_level, double min_distance,

const CvArr* mask=NULL, int block_size=3,

int use_harris=0, double k=0.04 );

image.

eig_image.

use_harris≠0

The function cvGoodFeaturesToTrack finds corners with big eigenvalues in the

image. The function first calculates the minimal eigenvalue for every source image pixel

using cvCornerMinEigenVal function and stores them in eig_image.

Then it performs non-maxima suppression (only local maxima in 3x3 neighborhood remain).

The next step is rejecting the corners with the

minimal eigenvalue less than quality_level•max(eig_image(x,y)). Finally,

the function ensures that all the corners found are distanced enough one from

another by considering the corners (the most strongest corners are considered first)

and checking that the distance between the newly considered feature and the features considered earlier

is larger than min_distance. So, the function removes the features than are too close

to the stronger features.

Extracts Speeded Up Robust Features from image

void cvExtractSURF( const CvArr* image, const CvArr* mask,

CvSeq** keypoints, CvSeq** descriptors,

CvMemStorage* storage, CvSURFParams params );

CvSURFPoint structures:

typedef struct CvSURFPoint

{

CvPoint2D32f pt; // position of the feature within the image

int laplacian; // -1, 0 or +1. sign of the laplacian at the point.

// can be used to speedup feature comparison

// (normally features with laplacians of different signs can not match)

int size; // size of the feature

float dir; // orientation of the feature: 0..360 degrees

float hessian; // value of the hessian (can be used to approximately estimate the feature strengths;

// see also params.hessianThreshold)

}

CvSURFPoint;

params.extended value, each element of the sequence

will be either 64-element or 128-element floating-point (CV_32F) vector.

If the parameter is NULL, the descriptors are not computed.

CvSURFParams:

typedef struct CvSURFParams

{

int extended; // 0 means basic descriptors (64 elements each),

// 1 means extended descriptors (128 elements each)

double hessianThreshold; // only features with keypoint.hessian larger than that are extracted.

// good default value is ~300-500 (can depend on the average

// local contrast and sharpness of the image).

// user can further filter out some features based on their hessian values

// and other characteristics

int nOctaves; // the number of octaves to be used for extraction.

// With each next octave the feature size is doubled (3 by default)

int nOctaveLayers; // The number of layers within each octave (4 by default)

}

CvSURFParams;

CvSURFParams cvSURFParams(double hessianThreshold, int extended=0); // returns default parameters

The function cvExtractSURF finds robust features in the image, as described in

[Bay06]. For each feature it returns its location, size, orientation

and optionally the descriptor, basic or extended. The function can be used for object tracking

and localization, image stitching etc. See find_obj.cpp demo in OpenCV samples directory.

Reads raster line to buffer

int cvSampleLine( const CvArr* image, CvPoint pt1, CvPoint pt2,

void* buffer, int connectivity=8 );

pt2.x-pt1.x|+1, |pt2.y-pt1.y|+1 ) points in case

of 8-connected line and |pt2.x-pt1.x|+|pt2.y-pt1.y|+1 in case

of 4-connected line.

The function cvSampleLine implements a particular case of application of line

iterators. The function reads all the image points lying on the line between pt1

and pt2, including the ending points, and stores them into the buffer.

Retrieves pixel rectangle from image with sub-pixel accuracy

void cvGetRectSubPix( const CvArr* src, CvArr* dst, CvPoint2D32f center );

The function cvGetRectSubPix extracts pixels from src:

dst(x, y) = src(x + center.x - (width(dst)-1)*0.5, y + center.y - (height(dst)-1)*0.5)

where the values of pixels at non-integer coordinates are retrieved using bilinear interpolation. Every channel of multiple-channel images is processed independently. Whereas the rectangle center must be inside the image, the whole rectangle may be partially occluded. In this case, the replication border mode is used to get pixel values beyond the image boundaries.

Retrieves pixel quadrangle from image with sub-pixel accuracy

void cvGetQuadrangleSubPix( const CvArr* src, CvArr* dst, const CvMat* map_matrix );

A|b] (see the discussion).

The function cvGetQuadrangleSubPix extracts pixels from src at sub-pixel accuracy

and stores them to dst as follows:

dst(x, y)= src( A11x'+A12y'+b1, A21x'+A22y'+b2), whereAandbare taken frommap_matrix| A11 A12 b1 | map_matrix = | | | A21 A22 b2 |, x'=x-(width(dst)-1)*0.5, y'=y-(height(dst)-1)*0.5

where the values of pixels at non-integer coordinates A•(x,y)T+b are retrieved using bilinear interpolation. When the function needs pixels outside of the image, it uses replication border mode to reconstruct the values. Every channel of multiple-channel images is processed independently.

Resizes image

void cvResize( const CvArr* src, CvArr* dst, int interpolation=CV_INTER_LINEAR );

CV_INTER_NN method.

The function cvResize resizes image src (or its ROI)

so that it fits exactly to dst (or its ROI).

Applies affine transformation to the image

void cvWarpAffine( const CvArr* src, CvArr* dst, const CvMat* map_matrix,

int flags=CV_INTER_LINEAR+CV_WARP_FILL_OUTLIERS,

CvScalar fillval=cvScalarAll(0) );

fillval.

matrix is inverse transform from destination image

to source and, thus, can be used directly for pixel interpolation. Otherwise,

the function finds the inverse transform from map_matrix.

The function cvWarpAffine transforms source image using the specified

matrix:

dst(x’,y’)<-src(x,y) (x’,y’)T=map_matrix•(x,y,1)T+b if CV_WARP_INVERSE_MAP is not set, (x, y)T=map_matrix•(x’,y&apos,1)T+b otherwise

The function is similar to cvGetQuadrangleSubPix but they are not exactly the same. cvWarpAffine requires input and output image have the same data type, has larger overhead (so it is not quite suitable for small images) and can leave part of destination image unchanged. While cvGetQuadrangleSubPix may extract quadrangles from 8-bit images into floating-point buffer, has smaller overhead and always changes the whole destination image content.

To transform a sparse set of points, use cvTransform function from cxcore.

Calculates affine transform from 3 corresponding points

CvMat* cvGetAffineTransform( const CvPoint2D32f* src, const CvPoint2D32f* dst,

CvMat* map_matrix );

The function cvGetAffineTransform calculates the

matrix of an affine transform such that:

(x'i,y'i)T=map_matrix•(xi,yi,1)T

where dst(i)=(x'i,y'i), src(i)=(xi,yi), i=0..2.

Calculates affine matrix of 2d rotation

CvMat* cv2DRotationMatrix( CvPoint2D32f center, double angle,

double scale, CvMat* map_matrix );

The function cv2DRotationMatrix calculates matrix:

[ α β | (1-α)*center.x - β*center.y ] [ -β α | β*center.x + (1-α)*center.y ] where α=scale*cos(angle), β=scale*sin(angle)

The transformation maps the rotation center to itself. If this is not the purpose, the shift should be adjusted.

Applies perspective transformation to the image

void cvWarpPerspective( const CvArr* src, CvArr* dst, const CvMat* map_matrix,

int flags=CV_INTER_LINEAR+CV_WARP_FILL_OUTLIERS,

CvScalar fillval=cvScalarAll(0) );

fillval.

matrix is inverse transform from destination image

to source and, thus, can be used directly for pixel interpolation. Otherwise,

the function finds the inverse transform from map_matrix.

The function cvWarpPerspective transforms source image using

the specified matrix:

dst(x’,y’)<-src(x,y) (t•x’,t•y’,t)T=map_matrix•(x,y,1)T+b if CV_WARP_INVERSE_MAP is not set, (t•x, t•y, t)T=map_matrix•(x’,y&apos,1)T+b otherwise

For a sparse set of points use cvPerspectiveTransform function from cxcore.

Calculates perspective transform from 4 corresponding points

CvMat* cvGetPerspectiveTransform( const CvPoint2D32f* src, const CvPoint2D32f* dst,

CvMat* map_matrix );

#define cvWarpPerspectiveQMatrix cvGetPerspectiveTransform

The function cvGetPerspectiveTransform calculates

matrix of perspective transform such that:

(ti•x'i,ti•y'i,ti)T=map_matrix•(xi,yi,1)T

where dst(i)=(x'i,y'i), src(i)=(xi,yi), i=0..3.

Applies generic geometrical transformation to the image

void cvRemap( const CvArr* src, CvArr* dst,

const CvArr* mapx, const CvArr* mapy,

int flags=CV_INTER_LINEAR+CV_WARP_FILL_OUTLIERS,

CvScalar fillval=cvScalarAll(0) );

fillval.

The function cvRemap transforms source image using

the specified map:

dst(x,y)<-src(mapx(x,y),mapy(x,y))

Similar to other geometrical transformations, some interpolation method (specified by user) is used to extract pixels with non-integer coordinates.

Remaps image to log-polar space

void cvLogPolar( const CvArr* src, CvArr* dst,

CvPoint2D32f center, double M,

int flags=CV_INTER_LINEAR+CV_WARP_FILL_OUTLIERS );

matrix is inverse transform from destination image

to source and, thus, can be used directly for pixel interpolation. Otherwise,

the function finds the inverse transform from map_matrix.

The function cvLogPolar transforms source image using

the following transformation:

Forward transformation (CV_WARP_INVERSE_MAPis not set): dst(phi,rho)<-src(x,y) Inverse transformation (CV_WARP_INVERSE_MAPis set): dst(x,y)<-src(phi,rho), where rho=M*log(sqrt(x2+y2)) phi=atan(y/x)

The function emulates the human "foveal" vision and can be used for fast scale and rotation-invariant template matching, for object tracking etc.

#include <cv.h>

#include <highgui.h>

int main(int argc, char** argv)

{

IplImage* src;

if( argc == 2 && (src=cvLoadImage(argv[1],1) != 0 )

{

IplImage* dst = cvCreateImage( cvSize(256,256), 8, 3 );

IplImage* src2 = cvCreateImage( cvGetSize(src), 8, 3 );

cvLogPolar( src, dst, cvPoint2D32f(src->width/2,src->height/2), 40, CV_INTER_LINEAR+CV_WARP_FILL_OUTLIERS );

cvLogPolar( dst, src2, cvPoint2D32f(src->width/2,src->height/2), 40, CV_INTER_LINEAR+CV_WARP_FILL_OUTLIERS+CV_WARP_INVERSE_MAP );

cvNamedWindow( "log-polar", 1 );

cvShowImage( "log-polar", dst );

cvNamedWindow( "inverse log-polar", 1 );

cvShowImage( "inverse log-polar", src2 );

cvWaitKey();

}

return 0;

}

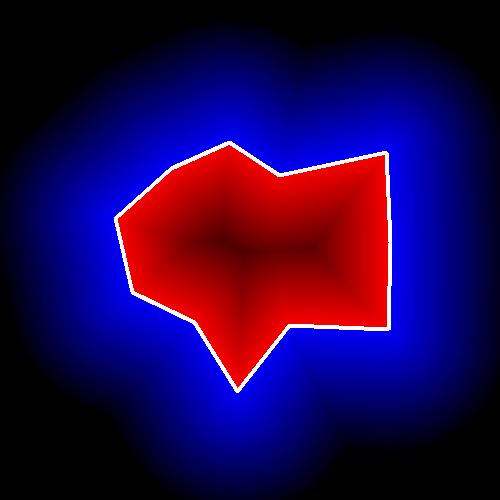

And this is what the program displays when opencv/samples/c/fruits.jpg is passed to it

Creates structuring element

IplConvKernel* cvCreateStructuringElementEx( int cols, int rows, int anchor_x, int anchor_y,

int shape, int* values=NULL );

CV_SHAPE_RECT, a rectangular element;

CV_SHAPE_CROSS, a cross-shaped element;

CV_SHAPE_ELLIPSE, an elliptic element;

CV_SHAPE_CUSTOM, a user-defined element. In this case the parameter values

specifies the mask, that is, which neighbors of the pixel must be considered.

NULL, then all values are considered

non-zero, that is, the element is of a rectangular shape. This parameter is

considered only if the shape is CV_SHAPE_CUSTOM .

The function cv CreateStructuringElementEx allocates and fills the structure

IplConvKernel, which can be used as a structuring element in the morphological

operations.

Deletes structuring element

void cvReleaseStructuringElement( IplConvKernel** element );

The function cvReleaseStructuringElement releases the structure IplConvKernel

that is no longer needed. If *element is NULL, the function has no effect.

Erodes image by using arbitrary structuring element

void cvErode( const CvArr* src, CvArr* dst, IplConvKernel* element=NULL, int iterations=1 );

NULL, a 3×3 rectangular

structuring element is used.

The function cvErode erodes the source image using the specified structuring element

that determines the shape of a pixel neighborhood over which the minimum is taken:

dst=erode(src,element): dst(x,y)=min((x',y') in element))src(x+x',y+y')

The function supports the in-place mode. Erosion can be applied several (iterations)

times. In case of color image each channel is processed independently.

Dilates image by using arbitrary structuring element

void cvDilate( const CvArr* src, CvArr* dst, IplConvKernel* element=NULL, int iterations=1 );

NULL, a 3×3 rectangular

structuring element is used.

The function cvDilate dilates the source image using the specified structuring element

that determines the shape of a pixel neighborhood over which the maximum is taken:

dst=dilate(src,element): dst(x,y)=max((x',y') in element))src(x+x',y+y')

The function supports the in-place mode. Dilation can be applied several (iterations)

times. In case of color image each channel is processed independently.

Performs advanced morphological transformations

void cvMorphologyEx( const CvArr* src, CvArr* dst, CvArr* temp,

IplConvKernel* element, int operation, int iterations=1 );

CV_MOP_OPEN - openingCV_MOP_CLOSE - closingCV_MOP_GRADIENT - morphological gradientCV_MOP_TOPHAT - "top hat"CV_MOP_BLACKHAT - "black hat"

The function cvMorphologyEx can perform advanced morphological

transformations using erosion and dilation as basic operations.

Opening: dst=open(src,element)=dilate(erode(src,element),element) Closing: dst=close(src,element)=erode(dilate(src,element),element) Morphological gradient: dst=morph_grad(src,element)=dilate(src,element)-erode(src,element) "Top hat": dst=tophat(src,element)=src-open(src,element) "Black hat": dst=blackhat(src,element)=close(src,element)-src

The temporary image temp is required for morphological gradient and, in case of in-place

operation, for "top hat" and "black hat".

Smoothes the image in one of several ways

void cvSmooth( const CvArr* src, CvArr* dst,

int smoothtype=CV_GAUSSIAN,

int size1=3, int size2=0, double sigma1=0, double sigma2=0 );

size1×size2 neighborhood of the pixel.

If the neighborhood size varies from pixel to pixel, compute the sums

using integral image (cvIntegral).

size1×size2 neighborhood of the pixel.

size1×size2.

sigma1 and sigma2 may optionally be

used to specify shape of the kernel.

size1×size1 (i.e. only square aperture can be used).

That is, for each pixel the result is the median computed

over size1×size1 neighborhood.

sigma1 and

spatial sigma=sigma2. If size1!=0, then

a circular kernel with diameter size1 is used;

otherwise the diameter of the kernel is computed from sigma2.

Information about bilateral filtering

can be found at

http://www.dai.ed.ac.uk/CVonline/LOCAL_COPIES/MANDUCHI1/Bilateral_Filtering.html

size2 is zero, it is set to size1.

When not 0, it should be odd too.

In case of Gaussian kernel this parameter may specify Gaussian sigma (standard deviation).

If it is zero, it is calculated from the kernel size:

sigma = (n/2 - 1)*0.3 + 0.8, where n=param1 for horizontal kernel,

n=param2 for vertical kernel.

With the standard sigma for small kernels (3×3 to 7×7) the performance is better.

If param3 is not zero, while param1 and param2

are zeros, the kernel size is calculated from the sigma (to provide accurate enough operation).

In case of Bilateral filter the parameter specifies color sigma; the larger the value, the stronger the pasterization effect of the filter is.

In case of non-square Gaussian kernel the parameter may be used to specify a different

(from param3) sigma in the vertical direction.

In case of Bilateral filter the parameter specifies spatial sigma; the larger the value,

the stronger the blurring effect of the filter. Note that with large sigma2

the processing speed decreases substantionally, so it is recommended to limit the kernel

size using the parameter size1.

The function cvSmooth smoothes image using one of the pre-defined methods. Every of the methods

has some features and restrictions listed below:

Applies linear filter to image

void cvFilter2D( const CvArr* src, CvArr* dst,

const CvMat* kernel,

CvPoint anchor=cvPoint(-1,-1));

The function cvFilter2D applies the specified linear filter to the image.

In-place operation is supported. When the aperture is partially outside the image,

the function interpolates outlier pixel values from the nearest pixels at the

image boundary. If ROI is set in the input

image, cvFilter2D treats it, similarly to many other OpenCV functions, as if

it were an isolated image, i.e. pixels inside the image but outside of the ROI

are ignored. If it is undesirable, consider new C++ filtering classes declared

in cv.hpp.

Copies image and makes border around it

void cvCopyMakeBorder( const CvArr* src, CvArr* dst, CvPoint offset,

int bordertype, CvScalar value=cvScalarAll(0) );

IPL_BORDER_CONSTANT -

border is filled with the fixed value, passed as last parameter of the function.IPL_BORDER_REPLICATE -

the pixels from the top and bottom rows, the left-most and right-most columns are replicated

to fill the border.IPL_BORDER_REFLECT and IPL_BORDER_WRAP,

are currently unsupported).

bordertype=IPL_BORDER_CONSTANT.

The function cvCopyMakeBorder copies the source 2D array into interior of destination array

and makes a border of the specified type around the copied area.

The function is useful when one needs to emulate border type that is different from the one embedded into a specific

algorithm implementation. For example, morphological functions, as well as most of other filtering functions in OpenCV,

internally use replication border type, while the user may need zero border or a border, filled with 1's or 255's.

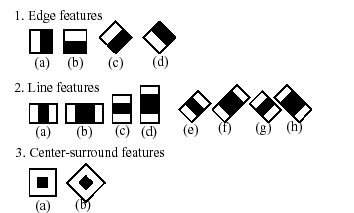

Calculates integral images

void cvIntegral( const CvArr* image, CvArr* sum, CvArr* sqsum=NULL, CvArr* tilted_sum=NULL );

W×H, 8-bit or floating-point (32f or 64f) image.

W+1×H+1, 32-bit integer or double precision floating-point (64f).

W+1×H+1, double precision floating-point (64f).

W+1×H+1, of the same data type as sum.

The function cvIntegral calculates one or more integral images for the source image as following:

sum(X,Y)=sumx<X,y<Yimage(x,y) sqsum(X,Y)=sumx<X,y<Yimage(x,y)2 tilted_sum(X,Y)=sumy<Y,abs(x-X)<yimage(x,y)

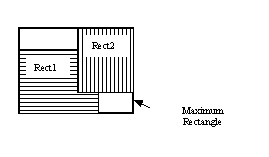

Using these integral images, one may calculate sum, mean, standard deviation over arbitrary up-right or rotated rectangular region of the image in a constant time, for example:

sumx1<=x<x2,y1<=y<y2image(x,y)=sum(x2,y2)-sum(x1,y2)-sum(x2,y1)+sum(x1,x1)

Using integral images it is possible to do variable-size image blurring, block correlation etc. In case of multi-channel input images the integral images must have the same number of channels, and every channel is processed independently.

Converts image from one color space to another

void cvCvtColor( const CvArr* src, CvArr* dst, int code );

The function cvCvtColor converts input image from one color space to another.

The function ignores colorModel and channelSeq fields of IplImage header,

so the source image color space should be specified correctly (including order of the channels in case

of RGB space, e.g. BGR means 24-bit format with B0 G0 R0 B1 G1 R1 ... layout,

whereas RGB means 24-bit format with R0 G0 B0 R1 G1 B1 ... layout).

The conventional range for R,G,B channel values is:

The function can do the following transformations:

RGB[A]->Gray: Y<-0.299*R + 0.587*G + 0.114*B Gray->RGB[A]: R<-Y G<-Y B<-Y A<-0

CV_BGR2XYZ, CV_RGB2XYZ, CV_XYZ2BGR, CV_XYZ2RGB):

|X| |0.412453 0.357580 0.180423| |R| |Y| <- |0.212671 0.715160 0.072169|*|G| |Z| |0.019334 0.119193 0.950227| |B| |R| | 3.240479 -1.53715 -0.498535| |X| |G| <- |-0.969256 1.875991 0.041556|*|Y| |B| | 0.055648 -0.204043 1.057311| |Z| X, Y and Z cover the whole value range (in case of floating-point images Z may exceed 1).

CV_BGR2YCrCb, CV_RGB2YCrCb, CV_YCrCb2BGR, CV_YCrCb2RGB)

Y <- 0.299*R + 0.587*G + 0.114*B

Cr <- (R-Y)*0.713 + delta

Cb <- (B-Y)*0.564 + delta

R <- Y + 1.403*(Cr - delta)

G <- Y - 0.344*(Cr - delta) - 0.714*(Cb - delta)

B <- Y + 1.773*(Cb - delta),

{ 128 for 8-bit images,

where delta = { 32768 for 16-bit images

{ 0.5 for floating-point images

Y, Cr and Cb cover the whole value range.

CV_BGR2HSV, CV_RGB2HSV, CV_HSV2BGR, CV_HSV2RGB)

// In case of 8-bit and 16-bit images

// R, G and B are converted to floating-point format and scaled to fit 0..1 range

V <- max(R,G,B)

S <- (V-min(R,G,B))/V if V≠0, 0 otherwise

(G - B)*60/S, if V=R

H <- 180+(B - R)*60/S, if V=G

240+(R - G)*60/S, if V=B

if H<0 then H<-H+360

On output 0≤V≤1, 0≤S≤1, 0≤H≤360.

The values are then converted to the destination data type:

8-bit images:

V <- V*255, S <- S*255, H <- H/2 (to fit to 0..255)

16-bit images (currently not supported):

V <- V*65535, S <- S*65535, H <- H

32-bit images:

H, S, V are left as is

CV_BGR2HLS, CV_RGB2HLS, CV_HLS2BGR, CV_HLS2RGB)

// In case of 8-bit and 16-bit images

// R, G and B are converted to floating-point format and scaled to fit 0..1 range

Vmax <- max(R,G,B)

Vmin <- min(R,G,B)

L <- (Vmax + Vmin)/2

S <- (Vmax - Vmin)/(Vmax + Vmin) if L < 0.5

(Vmax - Vmin)/(2 - (Vmax + Vmin)) if L ≥ 0.5

(G - B)*60/S, if Vmax=R

H <- 180+(B - R)*60/S, if Vmax=G

240+(R - G)*60/S, if Vmax=B

if H<0 then H<-H+360

On output 0≤L≤1, 0≤S≤1, 0≤H≤360.

The values are then converted to the destination data type:

8-bit images:

L <- L*255, S <- S*255, H <- H/2

16-bit images (currently not supported):

L <- L*65535, S <- S*65535, H <- H

32-bit images:

H, L, S are left as is

CV_BGR2Lab, CV_RGB2Lab, CV_Lab2BGR, CV_Lab2RGB)

// In case of 8-bit and 16-bit images

// R, G and B are converted to floating-point format and scaled to fit 0..1 range

// convert R,G,B to CIE XYZ

|X| |0.412453 0.357580 0.180423| |R|

|Y| <- |0.212671 0.715160 0.072169|*|G|

|Z| |0.019334 0.119193 0.950227| |B|

X <- X/Xn, where Xn = 0.950456

Z <- Z/Zn, where Zn = 1.088754

L <- 116*Y1/3 for Y>0.008856

L <- 903.3*Y for Y<=0.008856

a <- 500*(f(X)-f(Y)) + delta

b <- 200*(f(Y)-f(Z)) + delta

where f(t)=t1/3 for t>0.008856

f(t)=7.787*t+16/116 for t<=0.008856

where delta = 128 for 8-bit images,

0 for floating-point images

On output 0≤L≤100, -127≤a≤127, -127≤b≤127

The values are then converted to the destination data type:

8-bit images:

L <- L*255/100, a <- a + 128, b <- b + 128

16-bit images are currently not supported

32-bit images:

L, a, b are left as is

CV_BGR2Luv, CV_RGB2Luv, CV_Luv2BGR, CV_Luv2RGB)

// In case of 8-bit and 16-bit images

// R, G and B are converted to floating-point format and scaled to fit 0..1 range

// convert R,G,B to CIE XYZ

|X| |0.412453 0.357580 0.180423| |R|

|Y| <- |0.212671 0.715160 0.072169|*|G|

|Z| |0.019334 0.119193 0.950227| |B|

L <- 116*Y1/3-16 for Y>0.008856

L <- 903.3*Y for Y<=0.008856

u' <- 4*X/(X + 15*Y + 3*Z)

v' <- 9*Y/(X + 15*Y + 3*Z)

u <- 13*L*(u' - un), where un=0.19793943

v <- 13*L*(v' - vn), where vn=0.46831096

On output 0≤L≤100, -134≤u≤220, -140≤v≤122

The values are then converted to the destination data type:

8-bit images:

L <- L*255/100, u <- (u + 134)*255/354, v <- (v + 140)*255/256

16-bit images are currently not supported

32-bit images:

L, u, v are left as is

The above formulae for converting RGB to/from various color spaces have been taken

from multiple sources on Web, primarily from Color Space Conversions ([Ford98])

document at Charles Poynton site.

CV_BayerBG2BGR, CV_BayerGB2BGR, CV_BayerRG2BGR, CV_BayerGR2BGR,

CV_BayerBG2RGB, CV_BayerGB2RGB, CV_BayerRG2RGB, CV_BayerGR2RGB)

Bayer pattern is widely used in CCD and CMOS cameras. It allows to get color picture out of a single plane where R,G and B pixels (sensors of a particular component) are interleaved like this:

R |

G |

R |

G |

R |

G |

B |

G |

B |

G |

R |

G |

R |

G |

R |

G |

B |

G |

B |

G |

R |

G |

R |

G |

R |

G |

B |

G |

B |

G |

The output RGB components of a pixel are interpolated from 1, 2 or 4 neighbors of the pixel having the same color. There are several modifications of the above pattern that can be achieved by shifting the pattern one pixel left and/or one pixel up. The two letters C1 and C2 in the conversion constants CV_BayerC1C22{BGR|RGB} indicate the particular pattern type - these are components from the second row, second and third columns, respectively. For example, the above pattern has very popular "BG" type.

Applies fixed-level threshold to array elements

double cvThreshold( const CvArr* src, CvArr* dst, double threshold,

double max_value, int threshold_type );

src or 8-bit.

CV_THRESH_BINARY and

CV_THRESH_BINARY_INV thresholding types.

The function cvThreshold applies fixed-level thresholding to single-channel array.

The function is typically used to get bi-level (binary) image out of grayscale image

(cvCmpS

could be also used for this purpose) or for removing a noise, i.e. filtering out pixels with too small or too large values.

There are several types of thresholding the function supports that are determined by threshold_type:

threshold_type=CV_THRESH_BINARY:

dst(x,y) = max_value, if src(x,y)>threshold

0, otherwise

threshold_type=CV_THRESH_BINARY_INV:

dst(x,y) = 0, if src(x,y)>threshold

max_value, otherwise

threshold_type=CV_THRESH_TRUNC:

dst(x,y) = threshold, if src(x,y)>threshold

src(x,y), otherwise

threshold_type=CV_THRESH_TOZERO:

dst(x,y) = src(x,y), if src(x,y)>threshold

0, otherwise

threshold_type=CV_THRESH_TOZERO_INV:

dst(x,y) = 0, if src(x,y)>threshold

src(x,y), otherwise

And this is the visual description of thresholding types:

CV_THRESH_OTSU may be combined with

one of the above values. In this case the function determines the optimal threshold

value using Otsu algorithm and uses it instead of the specified thresh.

The function returns the computed threshold value.

Currently, Otsu method is implemented only for 8-bit images.

Applies adaptive threshold to array

void cvAdaptiveThreshold( const CvArr* src, CvArr* dst, double max_value,

int adaptive_method=CV_ADAPTIVE_THRESH_MEAN_C,

int threshold_type=CV_THRESH_BINARY,

int block_size=3, double param1=5 );

CV_THRESH_BINARY and CV_THRESH_BINARY_INV.

CV_ADAPTIVE_THRESH_MEAN_C

or CV_ADAPTIVE_THRESH_GAUSSIAN_C (see the discussion).

CV_THRESH_BINARY,

CV_THRESH_BINARY_INV

CV_ADAPTIVE_THRESH_MEAN_C and CV_ADAPTIVE_THRESH_GAUSSIAN_C

it is a constant subtracted from mean or weighted mean (see the discussion), though it may be negative.

The function cvAdaptiveThreshold transforms grayscale image to binary image according to

the formulae:

threshold_type=CV_THRESH_BINARY: dst(x,y) = max_value, if src(x,y)>T(x,y) 0, otherwise threshold_type=CV_THRESH_BINARY_INV: dst(x,y) = 0, if src(x,y)>T(x,y) max_value, otherwise

where TI is a threshold calculated individually for each pixel.

For the method CV_ADAPTIVE_THRESH_MEAN_C it is a mean of block_size × block_size

pixel neighborhood, subtracted by param1.

For the method CV_ADAPTIVE_THRESH_GAUSSIAN_C it is a weighted sum (Gaussian) of

block_size × block_size pixel neighborhood, subtracted by param1.

Downsamples image

void cvPyrDown( const CvArr* src, CvArr* dst, int filter=CV_GAUSSIAN_5x5 );

CV_GAUSSIAN_5x5 is

currently supported.

The function cvPyrDown performs downsampling step of Gaussian pyramid

decomposition. First it convolves source image with the specified filter and

then downsamples the image by rejecting even rows and columns.

Upsamples image

void cvPyrUp( const CvArr* src, CvArr* dst, int filter=CV_GAUSSIAN_5x5 );

CV_GAUSSIAN_5x5 is

currently supported.

The function cvPyrUp performs up-sampling step of Gaussian pyramid decomposition.

First it upsamples the source image by injecting even zero rows and columns and

then convolves result with the specified filter multiplied by 4 for

interpolation. So the destination image is four times larger than the source

image.

Connected component

typedef struct CvConnectedComp

{

double area; /* area of the segmented component */

float value; /* gray scale value of the segmented component */

CvRect rect; /* ROI of the segmented component */

} CvConnectedComp;

Fills a connected component with given color

void cvFloodFill( CvArr* image, CvPoint seed_point, CvScalar new_val,

CvScalar lo_diff=cvScalarAll(0), CvScalar up_diff=cvScalarAll(0),

CvConnectedComp* comp=NULL, int flags=4, CvArr* mask=NULL );

#define CV_FLOODFILL_FIXED_RANGE (1 << 16)

#define CV_FLOODFILL_MASK_ONLY (1 << 17)

new_val is ignored),

but the fills mask (that must be non-NULL in this case).

image. If not NULL, the function uses and updates the mask, so user takes responsibility of

initializing mask content. Floodfilling can't go across

non-zero pixels in the mask, for example, an edge detector output can be used as a mask

to stop filling at edges. Or it is possible to use the same mask in multiple calls to the function

to make sure the filled area do not overlap. Note: because mask is larger than the filled image,

pixel in mask that corresponds to (x,y) pixel in image

will have coordinates (x+1,y+1).

The function cvFloodFill fills a connected component starting from the seed point

with the specified color. The connectivity is determined by the closeness of pixel values.

The pixel at (x, y) is considered to belong to the repainted domain if:

src(x',y')-lo_diff<=src(x,y)<=src(x',y')+up_diff, grayscale image, floating range src(seed.x,seed.y)-lo<=src(x,y)<=src(seed.x,seed.y)+up_diff, grayscale image, fixed range src(x',y')r-lo_diffr<=src(x,y)r<=src(x',y')r+up_diffr and src(x',y')g-lo_diffg<=src(x,y)g<=src(x',y')g+up_diffg and src(x',y')b-lo_diffb<=src(x,y)b<=src(x',y')b+up_diffb, color image, floating range src(seed.x,seed.y)r-lo_diffr<=src(x,y)r<=src(seed.x,seed.y)r+up_diffr and src(seed.x,seed.y)g-lo_diffg<=src(x,y)g<=src(seed.x,seed.y)g+up_diffg and src(seed.x,seed.y)b-lo_diffb<=src(x,y)b<=src(seed.x,seed.y)b+up_diffb, color image, fixed rangewhere

src(x',y') is value of one of pixel neighbors.

That is, to be added to the connected component, a pixel’s color/brightness should be close enough to:

Finds contours in binary image

int cvFindContours( CvArr* image, CvMemStorage* storage, CvSeq** first_contour,

int header_size=sizeof(CvContour), int mode=CV_RETR_LIST,

int method=CV_CHAIN_APPROX_SIMPLE, CvPoint offset=cvPoint(0,0) );

binary. To get such a binary image

from grayscale, one may use cvThreshold, cvAdaptiveThreshold or cvCanny.

The function modifies the source image content.

method=CV_CHAIN_CODE,

and >=sizeof(CvContour) otherwise.

CV_RETR_EXTERNAL - retrieve only the extreme outer contours

CV_RETR_LIST - retrieve all the contours and puts them in the list

CV_RETR_CCOMP - retrieve all the contours and organizes them into two-level hierarchy:

top level are external boundaries of the components, second level are

boundaries of the holes

CV_RETR_TREE - retrieve all the contours and reconstructs the full hierarchy of

nested contours

CV_RETR_RUNS, which uses

built-in approximation).

CV_CHAIN_CODE - output contours in the Freeman chain code. All other methods output polygons

(sequences of vertices).

CV_CHAIN_APPROX_NONE - translate all the points from the chain code into

points;

CV_CHAIN_APPROX_SIMPLE - compress horizontal, vertical, and diagonal segments,

that is, the function leaves only their ending points;

CV_CHAIN_APPROX_TC89_L1,CV_CHAIN_APPROX_TC89_KCOS - apply one of the flavors of

Teh-Chin chain approximation algorithm.

CV_LINK_RUNS - use completely different contour retrieval algorithm via

linking of horizontal segments of 1’s. Only CV_RETR_LIST retrieval mode can be used

with this method.

- offset

- Offset, by which every contour point is shifted. This is useful if the contours are extracted from

the image ROI and then they should be analyzed in the whole image context.

The function cvFindContours retrieves contours from the binary image and returns

the number of retrieved contours. The pointer first_contour is filled by the function.

It will contain pointer to the first most outer contour or NULL if no contours is detected (if the image is completely black).

Other contours may be reached from first_contour using h_next and v_next links.

The sample in cvDrawContours discussion shows how to use contours for connected component

detection. Contours can be also used for shape analysis and object recognition - see squares.c

in OpenCV sample directory.

StartFindContours

Initializes contour scanning process

CvContourScanner cvStartFindContours( CvArr* image, CvMemStorage* storage,

int header_size=sizeof(CvContour),

int mode=CV_RETR_LIST,

int method=CV_CHAIN_APPROX_SIMPLE,

CvPoint offset=cvPoint(0,0) );

- image

- The source 8-bit single channel binary image.

- storage

- Container of the retrieved contours.

- header_size

- Size of the sequence header, >=sizeof(CvChain) if

method=CV_CHAIN_CODE,

and >=sizeof(CvContour) otherwise.

- mode

- Retrieval mode; see cvFindContours.

- method

- Approximation method. It has the same meaning as in cvFindContours,

but CV_LINK_RUNS can not be used here.

- offset

- ROI offset; see cvFindContours.

The function cvStartFindContours initializes and returns pointer to the contour

scanner. The scanner is used further in cvFindNextContour to retrieve the rest of contours.

FindNextContour

Finds next contour in the image

CvSeq* cvFindNextContour( CvContourScanner scanner );

- scanner

- Contour scanner initialized by The function

cvStartFindContours .

The function cvFindNextContour locates and retrieves the next contour in the image and

returns pointer to it. The function returns NULL, if there is no more contours.

SubstituteContour

Replaces retrieved contour

void cvSubstituteContour( CvContourScanner scanner, CvSeq* new_contour );

- scanner

- Contour scanner initialized by the function cvStartFindContours .

- new_contour

- Substituting contour.

The function cvSubstituteContour replaces the retrieved contour, that was returned

from the preceding call of The function cvFindNextContour and stored inside

the contour scanner state, with the user-specified contour. The contour is

inserted into the resulting structure, list, two-level hierarchy, or tree,

depending on the retrieval mode. If the parameter new_contour=NULL, the retrieved

contour is not included into the resulting structure, nor all of its children

that might be added to this structure later.

EndFindContours

Finishes scanning process

CvSeq* cvEndFindContours( CvContourScanner* scanner );

- scanner

- Pointer to the contour scanner.

The function cvEndFindContours finishes the scanning process and returns the

pointer to the first contour on the highest level.

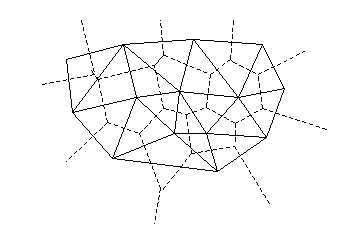

PyrSegmentation

Does image segmentation by pyramids

void cvPyrSegmentation( IplImage* src, IplImage* dst,

CvMemStorage* storage, CvSeq** comp,

int level, double threshold1, double threshold2 );

- src

- The source image.

- dst

- The destination image.

- storage

- Storage; stores the resulting sequence of connected components.

- comp

- Pointer to the output sequence of the segmented components.

- level

- Maximum level of the pyramid for the segmentation.

- threshold1

- Error threshold for establishing the links.

- threshold2

- Error threshold for the segments clustering.

The function cvPyrSegmentation implements image segmentation by pyramids. The

pyramid builds up to the level level. The links between any pixel a on level i

and its candidate father pixel b on the adjacent level are established if

p(c(a),c(b))<threshold1.

After the connected components are defined, they are joined into several

clusters. Any two segments A and B belong to the same cluster, if

p(c(A),c(B))<threshold2. The input

image has only one channel, then

p(c¹,c²)=|c¹-c²|. If the input image has three channels (red,

green and blue), then

p(c¹,c²)=0,3·(c¹r-c²r)+0,59·(c¹g-c²g)+0,11·(c¹b-c²b) .

There may be more than one connected component per a cluster.

The images src and dst should be 8-bit single-channel or 3-channel images

or equal size

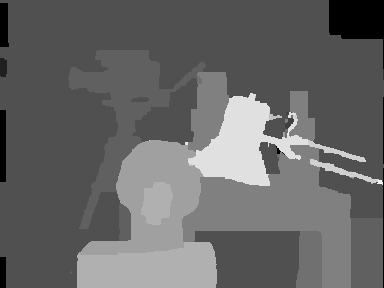

PyrMeanShiftFiltering

Does MeanShift image segmentation

void cvPyrMeanShiftFiltering( const CvArr* src, CvArr* dst,

double sp, double sr, int max_level=1,

CvTermCriteria termcrit=cvTermCriteria(CV_TERMCRIT_ITER+CV_TERMCRIT_EPS,5,1));

- src

- The source 8-bit 3-channel image.

- dst

- The destination image of the same format and the same size as the source.

- sp

- The spatial window radius.

- sr

- The color window radius.

- max_level

- Maximum level of the pyramid for the segmentation.

- termcrit

- Termination criteria: when to stop meanshift iterations.

The function cvPyrMeanShiftFiltering implements the filtering stage of meanshift

segmentation, that is, the output of the function is the filtered "posterized" image with

color gradients and fine-grain texture flattened. At every pixel (X,Y) of the

input image (or down-sized input image, see below) the function executes meanshift iterations,

that is, the pixel (X,Y) neighborhood in the joint space-color

hyper-space is considered:

{(x,y): X-sp≤x≤X+sp && Y-sp≤y≤Y+sp && ||(R,G,B)-(r,g,b)|| ≤ sr},

where (R,G,B) and (r,g,b) are the vectors of color components

at (X,Y) and (x,y), respectively (though, the algorithm does not depend

on the color space used, so any 3-component color space can be used instead).

Over the neighborhood the average spatial value (X',Y') and average color vector

(R',G',B') are found and they act as the neighborhood center on the next iteration:

(X,Y)~(X',Y'), (R,G,B)~(R',G',B').

After the iterations over, the color components of the initial pixel

(that is, the pixel from where the iterations started)

are set to the final value (average color at the last iteration):

I(X,Y) <- (R*,G*,B*).

Then max_level>0, the Gaussian pyramid of max_level+1 levels is built,

and the above procedure is run on the smallest layer. After that, the results are propagated to the

larger layer and the iterations are run again only on those pixels where the layer colors

differ much (>sr) from the lower-resolution layer, that is,

the boundaries of the color regions are clarified. Note, that the results

will be actually different from the ones obtained by running the meanshift procedure

on the whole original image (i.e. when max_level==0).

Watershed

Does watershed segmentation

void cvWatershed( const CvArr* image, CvArr* markers );

- image

- The input 8-bit 3-channel image.

- markers

- The input/output 32-bit single-channel image (map) of markers.

The function cvWatershed implements one of the variants of watershed,

non-parametric marker-based segmentation algorithm, described in [Meyer92]

Before passing the image to the function, user has to outline roughly the desired regions

in the image markers with positive (>0) indices, i.e. every region is represented as one or more

connected components with the pixel values 1, 2, 3 etc. Those components will be "seeds" of the

future image regions.

All the other pixels in markers, which relation to the outlined regions is not known

and should be defined by the algorithm, should be set to 0's. On the output of the function,

each pixel in markers is set to one of values of the "seed" components, or to -1

at boundaries between the regions.

Note, that it is not necessary that every

two neighbor connected components

are separated by a watershed boundary (-1's pixels), for example, in case when such tangent components

exist in the initial marker image. Visual demonstration and usage example of the function can be

found in OpenCV samples directory; see watershed.cpp demo.

Image and Contour moments

Moments

Calculates all moments up to third order of a polygon or rasterized shape

void cvMoments( const CvArr* arr, CvMoments* moments, int binary=0 );

- arr

- Image (1-channel or 3-channel with COI set)

or polygon (CvSeq of points or a vector of points).

- moments

- Pointer to returned moment state structure.

- binary

- (For images only) If the flag is non-zero, all the zero pixel values are treated as

zeroes, all the others are treated as 1’s.

The function cvMoments calculates spatial and central moments up to the third order and

writes them to moments. The moments may be used then to calculate gravity center of the shape,

its area, main axises and various shape characteristics including 7 Hu invariants.

GetSpatialMoment

Retrieves spatial moment from moment state structure

double cvGetSpatialMoment( CvMoments* moments, int x_order, int y_order );

- moments

- The moment state, calculated by cvMoments.

- x_order

- x order of the retrieved moment,

x_order >= 0.

- y_order

- y order of the retrieved moment,

y_order >= 0 and x_order + y_order <= 3.

The function cvGetSpatialMoment retrieves the spatial moment, which in case of

image moments is defined as:

Mx_order,y_order=sumx,y(I(x,y)•xx_order•yy_order)

where I(x,y) is the intensity of the pixel (x, y).

GetCentralMoment

Retrieves central moment from moment state structure

double cvGetCentralMoment( CvMoments* moments, int x_order, int y_order );

- moments

- Pointer to the moment state structure.

- x_order

- x order of the retrieved moment,

x_order >= 0.

- y_order

- y order of the retrieved moment,

y_order >= 0 and x_order + y_order <= 3.

The function cvGetCentralMoment retrieves the central moment, which in case of

image moments is defined as:

μx_order,y_order=sumx,y(I(x,y)•(x-xc)x_order•(y-yc)y_order),

where xc=M10/M00, yc=M01/M00 - coordinates of the gravity center

GetNormalizedCentralMoment

Retrieves normalized central moment from moment state structure

double cvGetNormalizedCentralMoment( CvMoments* moments, int x_order, int y_order );

- moments

- Pointer to the moment state structure.

- x_order

- x order of the retrieved moment,

x_order >= 0.

- y_order

- y order of the retrieved moment,

y_order >= 0 and x_order + y_order <= 3.

The function cvGetNormalizedCentralMoment

retrieves the normalized central moment:

ηx_order,y_order= μx_order,y_order/M00((y_order+x_order)/2+1)

GetHuMoments

Calculates seven Hu invariants

void cvGetHuMoments( CvMoments* moments, CvHuMoments* hu_moments );

- moments

- Pointer to the moment state structure.

- hu_moments

- Pointer to Hu moments structure.

The function cvGetHuMoments calculates seven Hu invariants that are defined as:

h1=η20+η02

h2=(η20-η02)²+4η11²

h3=(η30-3η12)²+ (3η21-η03)²

h4=(η30+η12)²+ (η21+η03)²

h5=(η30-3η12)(η30+η12)[(η30+η12)²-3(η21+η03)²]+(3η21-η03)(η21+η03)[3(η30+η12)²-(η21+η03)²]

h6=(η20-η02)[(η30+η12)²- (η21+η03)²]+4η11(η30+η12)(η21+η03)

h7=(3η21-η03)(η21+η03)[3(η30+η12)²-(η21+η03)²]-(η30-3η12)(η21+η03)[3(η30+η12)²-(η21+η03)²]

where ηi,j are normalized central moments of

2-nd and 3-rd orders.

The computed values are proved to be invariant to the image scaling, rotation, and

reflection except the seventh one, whose sign is changed by reflection.

Special Image Transforms

HoughLines2

Finds lines in binary image using Hough transform

CvSeq* cvHoughLines2( CvArr* image, void* line_storage, int method,

double rho, double theta, int threshold,

double param1=0, double param2=0 );

- image

- The input 8-bit single-channel binary image. In case of probabilistic method the image is

modified by the function.

- line_storage

- The storage for the lines detected. It can be a memory storage (in this case

a sequence of lines is created in the storage and returned by the function) or single row/single column

matrix (CvMat*) of a particular type (see below) to which the lines' parameters are written.

The matrix header is modified by the function so its

cols or rows will contain

a number of lines detected. If line_storage is a matrix and the actual number of lines

exceeds the matrix size, the maximum possible number of lines is returned

(in case of standard hough transform the lines are sorted by the accumulator value).

- method

- The Hough transform variant, one of:

CV_HOUGH_STANDARD - classical or standard Hough transform. Every line is represented by two floating-point numbers

(ρ, θ), where ρ is a distance between (0,0) point and the line, and θ is the angle

between x-axis and the normal to the line. Thus, the matrix must be (the created sequence will

be) of CV_32FC2 type.

CV_HOUGH_PROBABILISTIC - probabilistic Hough transform (more efficient in case if picture contains

a few long linear segments). It returns line segments rather than the whole lines.

Every segment is represented by starting and ending points, and the matrix must be

(the created sequence will be) of CV_32SC4 type.

CV_HOUGH_MULTI_SCALE - multi-scale variant of classical Hough transform.

The lines are encoded the same way as in CV_HOUGH_STANDARD.

- rho

- Distance resolution in pixel-related units.

- theta

- Angle resolution measured in radians.

- threshold

- Threshold parameter. A line is returned by the function if the corresponding

accumulator value is greater than

threshold.

- param1

- The first method-dependent parameter:

- For classical Hough transform it is not used (0).

- For probabilistic Hough transform it is the minimum line length.

- For multi-scale Hough transform it is divisor for distance resolution

rho.

(The coarse distance resolution will be rho and the accurate resolution will be (rho / param1)).

- param2

- The second method-dependent parameter:

- For classical Hough transform it is not used (0).

- For probabilistic Hough transform it is the maximum gap between line segments lying on the same line to

treat them as the single line segment (i.e. to join them).

- For multi-scale Hough transform it is divisor for angle resolution

theta.

(The coarse angle resolution will be theta and the accurate resolution will be (theta / param2)).

The function cvHoughLines2 implements a few variants of Hough transform for line detection.

Example. Detecting lines with Hough transform.

/* This is a stand-alone program. Pass an image name as a first parameter of the program.

Switch between standard and probabilistic Hough transform by changing "#if 1" to "#if 0" and back */

#include <cv.h>

#include <highgui.h>

#include <math.h>

int main(int argc, char** argv)

{

IplImage* src;

if( argc == 2 && (src=cvLoadImage(argv[1], 0))!= 0)

{

IplImage* dst = cvCreateImage( cvGetSize(src), 8, 1 );

IplImage* color_dst = cvCreateImage( cvGetSize(src), 8, 3 );

CvMemStorage* storage = cvCreateMemStorage(0);

CvSeq* lines = 0;

int i;

cvCanny( src, dst, 50, 200, 3 );

cvCvtColor( dst, color_dst, CV_GRAY2BGR );

#if 1

lines = cvHoughLines2( dst, storage, CV_HOUGH_STANDARD, 1, CV_PI/180, 100, 0, 0 );

for( i = 0; i < MIN(lines->total,100); i++ )

{

float* line = (float*)cvGetSeqElem(lines,i);

float rho = line[0];

float theta = line[1];

CvPoint pt1, pt2;

double a = cos(theta), b = sin(theta);

double x0 = a*rho, y0 = b*rho;

pt1.x = cvRound(x0 + 1000*(-b));

pt1.y = cvRound(y0 + 1000*(a));

pt2.x = cvRound(x0 - 1000*(-b));

pt2.y = cvRound(y0 - 1000*(a));

cvLine( color_dst, pt1, pt2, CV_RGB(255,0,0), 3, 8 );

}

#else

lines = cvHoughLines2( dst, storage, CV_HOUGH_PROBABILISTIC, 1, CV_PI/180, 50, 50, 10 );

for( i = 0; i < lines->total; i++ )

{

CvPoint* line = (CvPoint*)cvGetSeqElem(lines,i);

cvLine( color_dst, line[0], line[1], CV_RGB(255,0,0), 3, 8 );

}

#endif

cvNamedWindow( "Source", 1 );

cvShowImage( "Source", src );

cvNamedWindow( "Hough", 1 );

cvShowImage( "Hough", color_dst );

cvWaitKey(0);

}

}

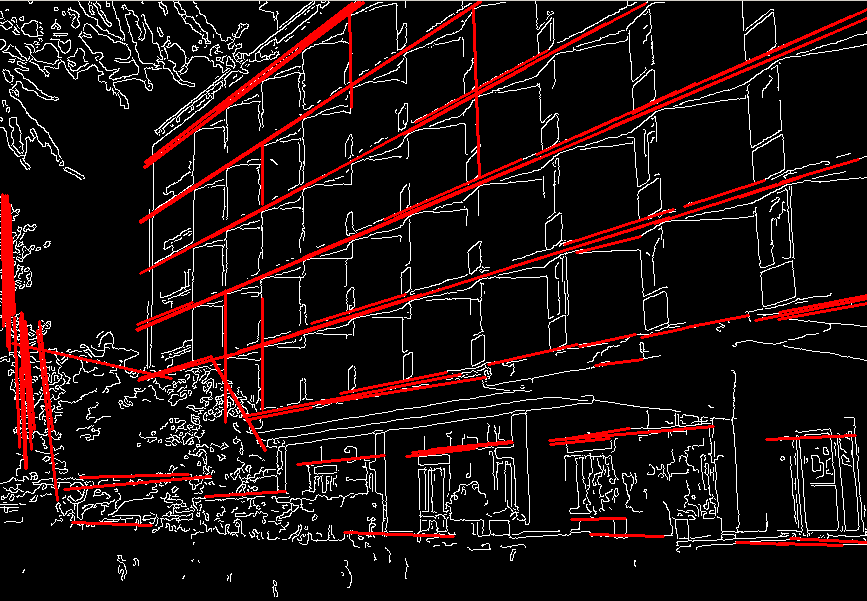

This is the sample picture the function parameters have been tuned for:

And this is the output of the above program in case of probabilistic Hough transform ("#if 0" case):

HoughCircles

Finds circles in grayscale image using Hough transform

CvSeq* cvHoughCircles( CvArr* image, void* circle_storage,

int method, double dp, double min_dist,

double param1=100, double param2=100,

int min_radius=0, int max_radius=0 );

- image

- The input 8-bit single-channel grayscale image.

- circle_storage

- The storage for the circles detected. It can be a memory storage (in this case

a sequence of circles is created in the storage and returned by the function) or single row/single column

matrix (CvMat*) of type CV_32FC3, to which the circles' parameters are written.

The matrix header is modified by the function so its

cols or rows will contain

a number of lines detected. If circle_storage is a matrix and the actual number of lines

exceeds the matrix size, the maximum possible number of circles is returned.

Every circle is encoded as 3 floating-point numbers: center coordinates (x,y) and the radius.

- method

- Currently, the only implemented method is

CV_HOUGH_GRADIENT, which is basically 21HT, described in

[Yuen03].

- dp

- Resolution of the accumulator used to detect centers of the circles. For example, if it is 1,

the accumulator will have the same resolution as the input image, if it is 2 - accumulator will have twice

smaller width and height, etc.

- min_dist

- Minimum distance between centers of the detected circles. If the parameter is too small, multiple

neighbor circles may be falsely detected in addition to a true one. If it is too large, some circles may be missed.

- param1

- The first method-specific parameter.

In case of

CV_HOUGH_GRADIENT it is the higher threshold of the two passed to Canny edge detector

(the lower one will be twice smaller).

- param2

- The second method-specific parameter.

In case of

CV_HOUGH_GRADIENT it is accumulator threshold at the center detection stage.

The smaller it is, the more false circles may be detected. Circles, corresponding to the larger accumulator

values, will be returned first.

- min_radius

- Minimal radius of the circles to search for.

- max_radius

- Maximal radius of the circles to search for.

By default the maximal radius is set to

max(image_width, image_height).

The function cvHoughCircles finds circles in grayscale image using some modification of Hough transform.

Example. Detecting circles with Hough transform.

#include <cv.h>

#include <highgui.h>

#include <math.h>

int main(int argc, char** argv)

{

IplImage* img;

if( argc == 2 && (img=cvLoadImage(argv[1], 1))!= 0)

{

IplImage* gray = cvCreateImage( cvGetSize(img), 8, 1 );

CvMemStorage* storage = cvCreateMemStorage(0);

cvCvtColor( img, gray, CV_BGR2GRAY );

cvSmooth( gray, gray, CV_GAUSSIAN, 9, 9 ); // smooth it, otherwise a lot of false circles may be detected

CvSeq* circles = cvHoughCircles( gray, storage, CV_HOUGH_GRADIENT, 2, gray->height/4, 200, 100 );

int i;

for( i = 0; i < circles->total; i++ )

{

float* p = (float*)cvGetSeqElem( circles, i );

cvCircle( img, cvPoint(cvRound(p[0]),cvRound(p[1])), 3, CV_RGB(0,255,0), -1, 8, 0 );

cvCircle( img, cvPoint(cvRound(p[0]),cvRound(p[1])), cvRound(p[2]), CV_RGB(255,0,0), 3, 8, 0 );

}

cvNamedWindow( "circles", 1 );

cvShowImage( "circles", img );

}

return 0;

}

DistTransform

Calculates distance to closest zero pixel for all non-zero pixels of source

image

void cvDistTransform( const CvArr* src, CvArr* dst, int distance_type=CV_DIST_L2,

int mask_size=3, const float* mask=NULL, CvArr* labels=NULL );

- src

- Source 8-bit single-channel (binary) image.

- dst

- Output image with calculated distances.

In most cases it should be 32-bit floating-point, single-channel array of

the same size as the input image.

When

distance_type==CV_DIST_L1, 8-bit, single-channel

destination array may be also used (in-place operation is also supported in this case).

- distance_type

- Type of distance; can be

CV_DIST_L1, CV_DIST_L2, CV_DIST_C or

CV_DIST_USER.

- mask_size

- Size of distance transform mask; can be 3, 5 or 0. In case of

CV_DIST_L1 or

CV_DIST_C the parameter is forced to 3, because 3×3 mask gives the same result

as 5×5 yet it is faster. When mask_size==0, a different non-approximate algorithm

is used to calculate distances.

- mask

- User-defined mask in case of user-defined distance, it consists of 2 numbers

(horizontal/vertical shift cost, diagonal shift cost) in case of 3×3 mask and

3 numbers (horizontal/vertical shift cost, diagonal shift cost, knight’s move cost)

in case of 5×5 mask.

- labels

- The optional output 2d array of labels of integer type

and the same size as

src and dst, can now be used only with

mask_size==3 or 5.

The function cvDistTransform calculates the approximated or exact distance from every binary image pixel

to the nearest zero pixel. When mask_size==0, the function uses the accurate algorithm

[Felzenszwalb04]. When mask_size==3 or 5, the function

uses the approximate algorithm [Borgefors86].

Here is how the approximate

algorithm works. For zero pixels the function sets the zero distance. For others it finds

the shortest path to a zero pixel, consisting of basic shifts: horizontal, vertical, diagonal or knight’s move (the

latest is available for 5×5 mask). The overall distance is calculated as a sum of these basic distances.

Because the distance function should be symmetric, all the horizontal and vertical shifts must have

the same cost (that is denoted as a), all the diagonal shifts must have the same cost

(denoted b), and all knight’s moves must have the same cost (denoted c).

For CV_DIST_C and CV_DIST_L1 types the distance is calculated precisely,

whereas for CV_DIST_L2 (Euclidean distance) the distance can be calculated only with

some relative error (5×5 mask gives more accurate results), OpenCV uses the values suggested in

[Borgefors86]:

CV_DIST_C (3×3):

a=1, b=1

CV_DIST_L1 (3×3):

a=1, b=2

CV_DIST_L2 (3×3):

a=0.955, b=1.3693

CV_DIST_L2 (5×5):

a=1, b=1.4, c=2.1969

And below are samples of distance field (black (0) pixel is in the middle of white square)

in case of user-defined distance:

User-defined 3×3 mask (a=1, b=1.5)

4.5 4 3.5 3 3.5 4 4.5 4 3 2.5 2 2.5 3 4 3.5 2.5 1.5 1 1.5 2.5 3.5 3 2 1 0 1 2 3 3.5 2.5 1.5 1 1.5 2.5 3.5 4 3 2.5 2 2.5 3 4 4.5 4 3.5 3 3.5 4 4.5

User-defined 5×5 mask (a=1, b=1.5, c=2)

4.5 3.5 3 3 3 3.5 4.5 3.5 3 2 2 2 3 3.5 3 2 1.5 1 1.5 2 3 3 2 1 0 1 2 3 3 2 1.5 1 1.5 2 3 3.5 3 2 2 2 3 3.5 4 3.5 3 3 3 3.5 4

Typically, for fast coarse distance estimation CV_DIST_L2, 3×3 mask is used,

and for more accurate distance estimation CV_DIST_L2, 5×5 mask is used.

When the output parameter labels is not NULL, for every non-zero pixel

the function also finds the nearest connected component consisting of zero pixels. The connected components

themselves are found as contours in the beginning of the function.

In this mode the processing time is still O(N), where N is the number of pixels.

Thus, the function provides a very fast way to compute approximate Voronoi diagram for the binary image.

Inpaint

Inpaints the selected region in the image

void cvInpaint( const CvArr* src, const CvArr* mask, CvArr* dst,

int flags, double inpaintRadius );

- src

- The input 8-bit 1-channel or 3-channel image.

- mask

- The inpainting mask, 8-bit 1-channel image.

Non-zero pixels indicate the area that needs to be inpainted.

- dst

- The output image of the same format and the same size as input.

- flags

- The inpainting method, one of the following:

CV_INPAINT_NS - Navier-Stokes based method.

CV_INPAINT_TELEA - The method by Alexandru Telea [Telea04]

- inpaintRadius

- The radius of circular neighborhood of each point inpainted that is considered by the algorithm.

The function cvInpaint reconstructs the selected image area from the pixel near the

area boundary. The function may be used to remove dust and scratches from a scanned photo, or

to remove undesirable objects from still images or video.

Histograms

CvHistogram

Multi-dimensional histogram

typedef struct CvHistogram

{

int type;

CvArr* bins;

float thresh[CV_MAX_DIM][2]; /* for uniform histograms */

float** thresh2; /* for non-uniform histograms */

CvMatND mat; /* embedded matrix header for array histograms */

}

CvHistogram;

CreateHist

Creates histogram

CvHistogram* cvCreateHist( int dims, int* sizes, int type,

float** ranges=NULL, int uniform=1 );

- dims

- Number of histogram dimensions.

- sizes

- Array of histogram dimension sizes.

- type

- Histogram representation format:

CV_HIST_ARRAY means that histogram data is

represented as an multi-dimensional dense array CvMatND;

CV_HIST_SPARSE means that histogram data is represented

as a multi-dimensional sparse array CvSparseMat.

- ranges

- Array of ranges for histogram bins. Its meaning depends on the

uniform parameter value.

The ranges are used for when histogram is calculated or back-projected to determine, which histogram bin

corresponds to which value/tuple of values from the input image[s].

- uniform

- Uniformity flag; if not 0, the histogram has evenly spaced bins and

for every

0<=i<cDims ranges[i] is array of two numbers: lower and upper

boundaries for the i-th histogram dimension. The whole range [lower,upper] is split then

into dims[i] equal parts to determine i-th input tuple value ranges for every histogram bin.

And if uniform=0, then i-th element of ranges array contains dims[i]+1 elements:

lower0, upper0, lower1, upper1 == lower2, ..., upperdims[i]-1,

where lowerj and upperj are lower and upper

boundaries of i-th input tuple value for j-th bin, respectively.

In either case, the input values that are beyond the specified range for a histogram bin, are not

counted by cvCalcHist and filled with 0 by cvCalcBackProject.

The function cvCreateHist creates a histogram of the specified size and returns

the pointer to the created histogram. If the array ranges is 0, the histogram

bin ranges must be specified later via The function cvSetHistBinRanges, though

cvCalcHist and cvCalcBackProject may process 8-bit images without setting

bin ranges, they assume equally spaced in 0..255 bins.

SetHistBinRanges

Sets bounds of histogram bins

void cvSetHistBinRanges( CvHistogram* hist, float** ranges, int uniform=1 );

- hist

- Histogram.

- ranges

- Array of bin ranges arrays, see cvCreateHist.

- uniform

- Uniformity flag, see cvCreateHist.

The function cvSetHistBinRanges is a stand-alone function for setting bin ranges

in the histogram. For more detailed description of the parameters ranges and

uniform see cvCalcHist function,

that can initialize the ranges as well.

Ranges for histogram bins must be set before the histogram is calculated or

back projection of the histogram is calculated.

ReleaseHist

Releases histogram

void cvReleaseHist( CvHistogram** hist );

- hist

- Double pointer to the released histogram.

The function cvReleaseHist releases the histogram (header and the data).

The pointer to histogram is cleared by the function. If *hist pointer is already

NULL, the function does nothing.

ClearHist

Clears histogram

void cvClearHist( CvHistogram* hist );

- hist

- Histogram.

The function cvClearHist sets all histogram bins to 0 in case of dense histogram and

removes all histogram bins in case of sparse array.

MakeHistHeaderForArray

Makes a histogram out of array

CvHistogram* cvMakeHistHeaderForArray( int dims, int* sizes, CvHistogram* hist,

float* data, float** ranges=NULL, int uniform=1 );

- dims

- Number of histogram dimensions.

- sizes

- Array of histogram dimension sizes.

- hist

- The histogram header initialized by the function.

- data

- Array that will be used to store histogram bins.

- ranges

- Histogram bin ranges, see cvCreateHist.

- uniform

- Uniformity flag, see cvCreateHist.

The function cvMakeHistHeaderForArray initializes the histogram, which header and

bins are allocated by user. No cvReleaseHist need to be called afterwards.

Only dense histograms can be initialized this way. The function returns hist.

QueryHistValue_*D

Queries value of histogram bin

#define cvQueryHistValue_1D( hist, idx0 ) \

cvGetReal1D( (hist)->bins, (idx0) )

#define cvQueryHistValue_2D( hist, idx0, idx1 ) \

cvGetReal2D( (hist)->bins, (idx0), (idx1) )

#define cvQueryHistValue_3D( hist, idx0, idx1, idx2 ) \

cvGetReal3D( (hist)->bins, (idx0), (idx1), (idx2) )

#define cvQueryHistValue_nD( hist, idx ) \

cvGetRealND( (hist)->bins, (idx) )

- hist

- Histogram.

- idx0, idx1, idx2, idx3

- Indices of the bin.

- idx

- Array of indices

The macros cvQueryHistValue_*D return the value of the specified bin of 1D, 2D, 3D or

N-D histogram. In case of sparse histogram the function returns 0, if the bin is not present in the

histogram, and no new bin is created.

GetHistValue_*D

Returns pointer to histogram bin

#define cvGetHistValue_1D( hist, idx0 ) \

((float*)(cvPtr1D( (hist)->bins, (idx0), 0 ))

#define cvGetHistValue_2D( hist, idx0, idx1 ) \

((float*)(cvPtr2D( (hist)->bins, (idx0), (idx1), 0 ))

#define cvGetHistValue_3D( hist, idx0, idx1, idx2 ) \

((float*)(cvPtr3D( (hist)->bins, (idx0), (idx1), (idx2), 0 ))

#define cvGetHistValue_nD( hist, idx ) \

((float*)(cvPtrND( (hist)->bins, (idx), 0 ))

- hist

- Histogram.

- idx0, idx1, idx2, idx3

- Indices of the bin.

- idx

- Array of indices

The macros cvGetHistValue_*D return pointer to the specified bin of 1D, 2D, 3D or

N-D histogram. In case of sparse histogram the function creates a new bin and sets it to 0,

unless it exists already.

GetMinMaxHistValue

Finds minimum and maximum histogram bins

void cvGetMinMaxHistValue( const CvHistogram* hist,

float* min_value, float* max_value,

int* min_idx=NULL, int* max_idx=NULL );

- hist

- Histogram.

- min_value

- Pointer to the minimum value of the histogram

- max_value

- Pointer to the maximum value of the histogram

- min_idx

- Pointer to the array of coordinates for minimum

- max_idx

- Pointer to the array of coordinates for maximum

The function cvGetMinMaxHistValue finds the minimum and maximum histogram bins and

their positions. Any of output arguments is optional.

Among several extremums with the same value the ones with minimum index (in lexicographical order)

In case of several maximums or minimums the earliest in lexicographical order

extrema locations are returned.

NormalizeHist

Normalizes histogram

void cvNormalizeHist( CvHistogram* hist, double factor );

- hist

- Pointer to the histogram.

- factor

- Normalization factor.

The function cvNormalizeHist normalizes the histogram bins by scaling them,

such that the sum of the bins becomes equal to factor.

ThreshHist

Thresholds histogram

void cvThreshHist( CvHistogram* hist, double threshold );

- hist

- Pointer to the histogram.

- threshold

- Threshold level.

The function cvThreshHist clears histogram bins

that are below the specified threshold.

CompareHist

Compares two dense histograms

double cvCompareHist( const CvHistogram* hist1, const CvHistogram* hist2, int method );

- hist1

- The first dense histogram.

- hist2

- The second dense histogram.

- method

- Comparison method, one of:

- CV_COMP_CORREL

- CV_COMP_CHISQR

- CV_COMP_INTERSECT

- CV_COMP_BHATTACHARYYA

The function cvCompareHist compares two dense histograms using

the specified method as following

(H1 denotes the first histogram, H2 - the second):

Correlation (method=CV_COMP_CORREL):

d(H1,H2)=sumI(H'1(I)•H'2(I))/sqrt(sumI[H'1(I)2]•sumI[H'2(I)2])

where

H'k(I)=Hk(I)-1/N•sumJHk(J) (N=number of histogram bins)

Chi-Square (method=CV_COMP_CHISQR):

d(H1,H2)=sumI[(H1(I)-H2(I))/(H1(I)+H2(I))]

Intersection (method=CV_COMP_INTERSECT):

d(H1,H2)=sumImin(H1(I),H2(I))

Bhattacharyya distance (method=CV_COMP_BHATTACHARYYA):

d(H1,H2)=sqrt(1-sumI(sqrt(H1(I)•H2(I))))

The function returns d(H1,H2) value.

Note: the method CV_COMP_BHATTACHARYYA only works with normalized histograms.

To compare sparse histogram or more general sparse configurations of weighted points,

consider using cvCalcEMD2 function.

CopyHist

Copies histogram

void cvCopyHist( const CvHistogram* src, CvHistogram** dst );

- src

- Source histogram.

- dst

- Pointer to destination histogram.

The function cvCopyHist makes a copy of the histogram. If the second histogram

pointer *dst is NULL, a new histogram of the same size as src is created.

Otherwise, both histograms must have equal types and sizes.

Then the function copies the source histogram bins values to destination histogram and

sets the same bin values ranges as in src.

CalcHist

Calculates histogram of image(s)

void cvCalcHist( IplImage** image, CvHistogram* hist,

int accumulate=0, const CvArr* mask=NULL );

- image

- Source images (though, you may pass CvMat** as well), all are of the same size and type

- hist

- Pointer to the histogram.

- accumulate

- Accumulation flag. If it is set, the histogram is not cleared in the beginning.

This feature allows user to compute a single histogram from several images, or to update the histogram online.

- mask

- The operation mask, determines what pixels of the source images are counted.

The function cvCalcHist calculates the histogram of one or more single-channel images.

The elements of a tuple that is used to increment a histogram bin are taken at the same

location from the corresponding input images.

Sample. Calculating and displaying 2D Hue-Saturation histogram of a color image

#include <cv.h>

#include <highgui.h>

int main( int argc, char** argv )

{

IplImage* src;

if( argc == 2 && (src=cvLoadImage(argv[1], 1))!= 0)

{

IplImage* h_plane = cvCreateImage( cvGetSize(src), 8, 1 );

IplImage* s_plane = cvCreateImage( cvGetSize(src), 8, 1 );

IplImage* v_plane = cvCreateImage( cvGetSize(src), 8, 1 );

IplImage* planes[] = { h_plane, s_plane };

IplImage* hsv = cvCreateImage( cvGetSize(src), 8, 3 );

int h_bins = 30, s_bins = 32;

int hist_size[] = {h_bins, s_bins};

float h_ranges[] = { 0, 180 }; /* hue varies from 0 (~0°red) to 180 (~360°red again) */

float s_ranges[] = { 0, 255 }; /* saturation varies from 0 (black-gray-white) to 255 (pure spectrum color) */

float* ranges[] = { h_ranges, s_ranges };

int scale = 10;

IplImage* hist_img = cvCreateImage( cvSize(h_bins*scale,s_bins*scale), 8, 3 );

CvHistogram* hist;

float max_value = 0;

int h, s;

cvCvtColor( src, hsv, CV_BGR2HSV );

cvCvtPixToPlane( hsv, h_plane, s_plane, v_plane, 0 );