Object Detection¶

FeatureEvaluator¶

- FeatureEvaluator¶

Base class for computing feature values in cascade classifiers.

class CV_EXPORTS FeatureEvaluator

{

public:

enum { HAAR = 0, LBP = 1 }; // supported feature types

virtual ~FeatureEvaluator(); // destructor

virtual bool read(const FileNode& node);

virtual Ptr<FeatureEvaluator> clone() const;

virtual int getFeatureType() const;

virtual bool setImage(const Mat& img, Size origWinSize);

virtual bool setWindow(Point p);

virtual double calcOrd(int featureIdx) const;

virtual int calcCat(int featureIdx) const;

static Ptr<FeatureEvaluator> create(int type);

};

cv::FeatureEvaluator::read¶

cv::FeatureEvaluator::clone¶

- Ptr<FeatureEvaluator> FeatureEvaluator::clone() const¶

- Returns a full copy of the feature evaluator.

cv::FeatureEvaluator::getFeatureType¶

- int FeatureEvaluator::getFeatureType() const¶

- Returns the feature type (HAAR or LBP for now).

cv::FeatureEvaluator::setImage¶

cv::FeatureEvaluator::setWindow¶

- bool FeatureEvaluator::setWindow(Point p)¶

Sets the window in the current image in which the features will be computed (called by CascadeClassifier::runAt).

Parameter: p – The upper-left point of the window in which the features will be computed. The window size is equal to the size of the training images.

cv::FeatureEvaluator::calcOrd¶

- double FeatureEvaluator::calcOrd(int featureIdx) const¶

Computes the value of an ordered (numerical) feature.

Parameter: featureIdx – Index of the feature whose value will be computed.

Returns the computed value of the ordered feature.

cv::FeatureEvaluator::calcCat¶

- int FeatureEvaluator::calcCat(int featureIdx) const¶

Computes the value of a categorical feature.

Parameter: featureIdx – Index of the feature whose value will be computed.

Returns the computed label of the categorical feature, i.e. a value from [0, ..., (number of categories - 1)].
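The following is a minimal sketch of how a categorical label, such as the one returned by calcCat, could be tested against a subset stored as a bitmask. The helper name goesLeft, the packing of 32 labels per int, and the convention that a set bit selects the left child are all assumptions made for illustration; they are not taken from this reference.

```cpp
#include <cassert>
#include <vector>

// Hypothetical helper (not part of OpenCV): decides which child of a tree
// node a categorical feature value selects.
// Assumption: `subset` is a bitmask over category labels, 32 labels per int,
// and a set bit sends the sample to the left child.
bool goesLeft(const std::vector<int>& subset, int category) {
    assert(category >= 0 && category < static_cast<int>(subset.size()) * 32);
    const unsigned word = static_cast<unsigned>(subset[category >> 5]);
    return ((word >> (category & 31)) & 1u) != 0;
}
```

With 256 categories, such a subset would occupy 8 ints per node under these assumptions.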

cv::FeatureEvaluator::create¶

- static Ptr<FeatureEvaluator> FeatureEvaluator::create(int type)¶

Constructs the feature evaluator.

Parameter: type – Type of features evaluated by the cascade (HAAR or LBP for now).

CascadeClassifier¶

- CascadeClassifier¶

The cascade classifier class for object detection.

class CascadeClassifier

{

public:

// structure for storing tree node

struct CV_EXPORTS DTreeNode

{

int featureIdx; // index of the feature on which the node splits

float threshold; // split threshold of ordered features only

int left; // left child index in the tree nodes array

int right; // right child index in the tree nodes array

};

// structure for storing decision tree

struct CV_EXPORTS DTree

{

int nodeCount; // nodes count

};

// structure for storing cascade stage (BOOST only for now)

struct CV_EXPORTS Stage

{

int first; // first tree index in tree array

int ntrees; // number of trees

float threshold; // threshold of stage sum

};

enum { BOOST = 0 }; // supported stage types

// mode of detection (see parameter flags in function HaarDetectObjects)

enum { DO_CANNY_PRUNING = CV_HAAR_DO_CANNY_PRUNING,

SCALE_IMAGE = CV_HAAR_SCALE_IMAGE,

FIND_BIGGEST_OBJECT = CV_HAAR_FIND_BIGGEST_OBJECT,

DO_ROUGH_SEARCH = CV_HAAR_DO_ROUGH_SEARCH };

CascadeClassifier(); // default constructor

CascadeClassifier(const string& filename);

~CascadeClassifier(); // destructor

bool empty() const;

bool load(const string& filename);

bool read(const FileNode& node);

void detectMultiScale( const Mat& image, vector<Rect>& objects,

double scaleFactor=1.1, int minNeighbors=3,

int flags=0, Size minSize=Size());

bool setImage( Ptr<FeatureEvaluator>&, const Mat& );

int runAt( Ptr<FeatureEvaluator>&, Point );

bool is_stump_based; // true, if the trees are stumps

int stageType; // stage type (BOOST only for now)

int featureType; // feature type (HAAR or LBP for now)

int ncategories; // number of categories (for categorical features only)

Size origWinSize; // size of training images

vector<Stage> stages; // vector of stages (BOOST for now)

vector<DTree> classifiers; // vector of decision trees

vector<DTreeNode> nodes; // vector of tree nodes

vector<float> leaves; // vector of leaf values

vector<int> subsets; // subsets of split by categorical feature

Ptr<FeatureEvaluator> feval; // pointer to feature evaluator

Ptr<CvHaarClassifierCascade> oldCascade; // pointer to old cascade

};

cv::CascadeClassifier::CascadeClassifier¶

- CascadeClassifier::CascadeClassifier(const string& filename)¶

Loads the classifier from a file.

Parameter: filename – Name of the file from which the classifier will be loaded.

cv::CascadeClassifier::empty¶

- bool CascadeClassifier::empty() const¶

- Checks if the classifier has been loaded or not.

cv::CascadeClassifier::load¶

- bool CascadeClassifier::load(const string& filename)¶

Loads the classifier from a file. The previous content is destroyed.

Parameter: filename – Name of the file from which the classifier will be loaded. The file may contain either an old Haar classifier (trained by the haartraining application) or a new cascade classifier (trained by the traincascade application).

cv::CascadeClassifier::read¶

cv::CascadeClassifier::detectMultiScale¶

- void CascadeClassifier::detectMultiScale(const Mat& image, vector<Rect>& objects, double scaleFactor=1.1, int minNeighbors=3, int flags=0, Size minSize=Size())¶

Detects objects of different sizes in the input image. The detected objects are returned as a list of rectangles.

Parameters: - image – Matrix of type CV_8U containing the image in which to detect objects.

- objects – Vector of rectangles such that each rectangle contains the detected object.

- scaleFactor – Specifies how much the image size is reduced at each image scale.

- minNeighbors – Specifies how many neighbors each candidate rectangle should have to be retained.

- flags – This parameter is not used for a new cascade and has the same meaning for an old cascade as in the function cvHaarDetectObjects.

- minSize – The minimum possible object size. Objects smaller than that are ignored.
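To illustrate how scaleFactor and minSize interact, here is a simplified sketch that lists the scale factors at which a detection window could be evaluated. It is a model under stated assumptions, not the library's implementation: the function name pyramidScales, the SizeI struct, the rounding mode, and the exact stopping rule are all hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct SizeI { int width, height; };

// Hypothetical model of a multi-scale search: at each level the image is
// shrunk by `factor`, which is equivalent to searching for objects of size
// origWin * factor in the original image.
std::vector<double> pyramidScales(SizeI image, SizeI origWin,
                                  double scaleFactor, SizeI minSize) {
    assert(scaleFactor > 1.0);
    assert(origWin.width > 0 && origWin.height > 0);
    std::vector<double> scales;
    for (double factor = 1.0; ; factor *= scaleFactor) {
        // Object size at this level, in original-image pixels.
        const SizeI window{ static_cast<int>(std::lround(origWin.width * factor)),
                            static_cast<int>(std::lround(origWin.height * factor)) };
        // Size of the downscaled image at this level.
        const SizeI scaled{ static_cast<int>(std::lround(image.width / factor)),
                            static_cast<int>(std::lround(image.height / factor)) };
        // Stop once the training-size window no longer fits.
        if (scaled.width < origWin.width || scaled.height < origWin.height) break;
        // Skip levels whose objects would be smaller than minSize.
        if (window.width < minSize.width || window.height < minSize.height) continue;
        scales.push_back(factor);
    }
    return scales;
}
```

Under this model, a smaller scaleFactor visits more levels (finer search, more work), while a larger minSize skips the earliest, most expensive levels.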

cv::CascadeClassifier::setImage¶

- bool CascadeClassifier::setImage(Ptr<FeatureEvaluator>& feval, const Mat& image)¶

Sets the image for detection (called by detectMultiScale at each image level).

Parameters: - feval – Pointer to feature evaluator which is used for computing features.

- image – Matrix of type CV_8UC1 containing the image in which to compute the features.

cv::CascadeClassifier::runAt¶

- int CascadeClassifier::runAt(Ptr<FeatureEvaluator>& feval, Point pt)¶

Runs the detector at the specified point (the image that the detector is working with should be set by setImage).

Parameters: - feval – Feature evaluator which is used for computing features.

- pt – The upper left point of window in which the features will be computed. Size of the window is equal to size of training images.

Returns: 1 if the cascade classifier detects an object at the given location; otherwise -si, where si is the index of the stage that first predicted that the given window is a background image.
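The return convention can be sketched for a stump-based boosted cascade as follows. This is an illustrative model only: the Stump and StageSketch structs, the function runCascadeAt, and the rule that a feature value below the stump threshold selects the left leaf are assumptions, and featureValues stands in for the values that FeatureEvaluator::calcOrd would return at the current window.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical flattened stump: one split plus two leaf values.
struct Stump { int featureIdx; float threshold; float leftValue; float rightValue; };
// Mirrors the Stage layout above: a run of `ntrees` stumps starting at `first`.
struct StageSketch { int first; int ntrees; float threshold; };

// Returns 1 if every stage accepts the window, otherwise -si for the first
// rejecting stage si (note that a rejection at stage 0 therefore yields 0).
int runCascadeAt(const std::vector<StageSketch>& stages,
                 const std::vector<Stump>& stumps,
                 const std::vector<double>& featureValues) {
    for (std::size_t si = 0; si < stages.size(); ++si) {
        const StageSketch& stage = stages[si];
        double sum = 0.0;
        for (int t = 0; t < stage.ntrees; ++t) {
            const Stump& s = stumps[stage.first + t];
            assert(s.featureIdx >= 0 &&
                   s.featureIdx < static_cast<int>(featureValues.size()));
            // Assumption: values below the threshold take the left leaf.
            sum += featureValues[s.featureIdx] < s.threshold ? s.leftValue
                                                             : s.rightValue;
        }
        if (sum < stage.threshold) return -static_cast<int>(si);
    }
    return 1;
}
```

Because most windows are rejected by an early stage, the loop usually exits after evaluating only a few stumps, which is what makes a cascade fast.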

cv::groupRectangles¶

- void groupRectangles(vector<Rect>& rectList, int groupThreshold, double eps=0.2)¶

Groups the object candidate rectangles.

Parameters: - rectList – The input/output vector of rectangles. On output it contains the retained and grouped rectangles.

- groupThreshold – The minimum possible number of rectangles, minus 1, in a group of rectangles to retain it.

- eps – The relative difference between sides of the rectangles to merge them into a group.

The function is a wrapper for the generic function partition(). It clusters all the input rectangles using a rectangle equivalence criterion that combines rectangles with similar sizes and similar locations (the similarity is defined by eps). When eps=0, no clustering is done at all. If eps → +∞, all the rectangles are put in one cluster. Then the small clusters, containing less than or equal to groupThreshold rectangles, are rejected. In each remaining cluster, the average rectangle is computed and put into the output rectangle list.
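The grouping procedure described above can be sketched without OpenCV as follows. This is a simplified illustration, not the library's code: the RectI struct, the function names, the union-find clustering used in place of partition(), and the exact form of the similarity test (a tolerance proportional to eps and to the smaller rectangle sides) are assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdlib>
#include <numeric>
#include <vector>

struct RectI { int x, y, width, height; };

// Assumed similarity test: all four edges must agree within a tolerance
// that scales with eps and the smaller of the two rectangles' sides.
bool similarRects(const RectI& a, const RectI& b, double eps) {
    const double delta =
        eps * (std::min(a.width, b.width) + std::min(a.height, b.height)) * 0.5;
    return std::abs(a.x - b.x) <= delta &&
           std::abs(a.y - b.y) <= delta &&
           std::abs((a.x + a.width) - (b.x + b.width)) <= delta &&
           std::abs((a.y + a.height) - (b.y + b.height)) <= delta;
}

// Union-find root lookup with path halving.
int findRoot(std::vector<int>& parent, int i) {
    while (parent[i] != i) {
        parent[i] = parent[parent[i]];
        i = parent[i];
    }
    return i;
}

std::vector<RectI> groupRectanglesSketch(const std::vector<RectI>& rects,
                                         int groupThreshold, double eps) {
    assert(groupThreshold >= 0 && eps >= 0.0);
    const int n = static_cast<int>(rects.size());
    std::vector<int> parent(n);
    std::iota(parent.begin(), parent.end(), 0);
    // Merge every pair of similar rectangles into one cluster.
    for (int i = 0; i < n; ++i) {
        for (int j = i + 1; j < n; ++j) {
            if (similarRects(rects[i], rects[j], eps)) {
                const int ri = findRoot(parent, i);
                const int rj = findRoot(parent, j);
                parent[ri] = rj;
            }
        }
    }
    // Accumulate per-cluster sums, indexed by cluster root.
    std::vector<RectI> sum(n, RectI{0, 0, 0, 0});
    std::vector<int> count(n, 0);
    for (int i = 0; i < n; ++i) {
        const int r = findRoot(parent, i);
        sum[r].x += rects[i].x;
        sum[r].y += rects[i].y;
        sum[r].width += rects[i].width;
        sum[r].height += rects[i].height;
        ++count[r];
    }
    // Reject clusters with <= groupThreshold members; average the rest.
    std::vector<RectI> grouped;
    for (int r = 0; r < n; ++r) {
        if (count[r] <= groupThreshold) continue;
        const double c = count[r];
        grouped.push_back(RectI{ static_cast<int>(std::lround(sum[r].x / c)),
                                 static_cast<int>(std::lround(sum[r].y / c)),
                                 static_cast<int>(std::lround(sum[r].width / c)),
                                 static_cast<int>(std::lround(sum[r].height / c)) });
    }
    return grouped;
}
```

With groupThreshold=1, an isolated rectangle forms a cluster of size 1 and is dropped, so only locations confirmed by at least two overlapping candidates survive.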

cv::matchTemplate¶

- void matchTemplate(const Mat& image, const Mat& templ, Mat& result, int method)¶

Compares a template against overlapped image regions.

Parameters: - image – Image where the search is running; should be 8-bit or 32-bit floating-point

- templ – Searched template; must be not greater than the source image and have the same data type

- result – A map of comparison results; will be single-channel 32-bit floating-point.

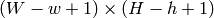

If image is

and templ is

and templ is  then result will be

then result will be

- method – Specifies the comparison method (see below)

The function slides through

image

, compares the

overlapped patches of size

against

templ

using the specified method and stores the comparison results to

result

. Here are the formulas for the available comparison

methods (

against

templ

using the specified method and stores the comparison results to

result

. Here are the formulas for the available comparison

methods (

denotes

image

,

denotes

image

,

template

,

template

,

result

). The summation is done over template and/or the

image patch:

result

). The summation is done over template and/or the

image patch:

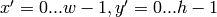

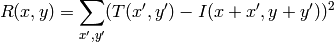

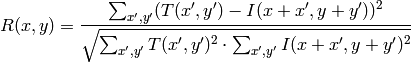

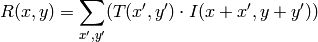

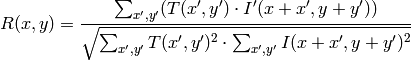

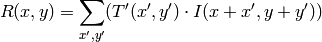

method=CV_TM_SQDIFF

method=CV_TM_SQDIFF_NORMED

method=CV_TM_CCORR

method=CV_TM_CCORR_NORMED

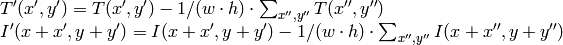

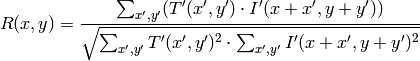

method=CV_TM_CCOEFF

where

method=CV_TM_CCOEFF_NORMED
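As a concrete illustration of the sliding comparison, the sketch below computes the squared-difference map (the CV_TM_SQDIFF method: the sum of squared differences between the template and each overlapped patch) for a single-channel image stored in a plain array. The Gray struct and the function matchSqDiff are hypothetical names introduced here; this is a direct, unoptimized reading of the definition, not the library's implementation.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical row-major, single-channel float image.
struct Gray {
    int width, height;
    std::vector<float> data;
    float at(int x, int y) const { return data[static_cast<std::size_t>(y) * width + x]; }
};

// Sum of squared differences between templ and every overlapped patch.
// The result has size (W - w + 1) x (H - h + 1).
Gray matchSqDiff(const Gray& image, const Gray& templ) {
    assert(templ.width <= image.width && templ.height <= image.height);
    Gray result{ image.width - templ.width + 1, image.height - templ.height + 1, {} };
    result.data.assign(static_cast<std::size_t>(result.width) * result.height, 0.0f);
    for (int y = 0; y < result.height; ++y) {
        for (int x = 0; x < result.width; ++x) {
            float sum = 0.0f;
            for (int yy = 0; yy < templ.height; ++yy) {
                for (int xx = 0; xx < templ.width; ++xx) {
                    const float d = templ.at(xx, yy) - image.at(x + xx, y + yy);
                    sum += d * d;
                }
            }
            result.data[static_cast<std::size_t>(y) * result.width + x] = sum;
        }
    }
    return result;
}
```

A value of 0 in the map marks a patch identical to the template, which is why the best match for this method is the global minimum.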

After the function finishes the comparison, the best matches can be found as global minimums (when CV_TM_SQDIFF was used) or maximums (when CV_TM_CCORR or CV_TM_CCOEFF was used) using the minMaxLoc() function. In the case of a color image, template summation in the numerator and each sum in the denominator is done over all of the channels (and separate mean values are used for each channel). That is, the function can take a color template and a color image; the result will still be a single-channel image, which is easier to analyze.
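For the correlation-based methods the best match is a maximum rather than a minimum. The sketch below, written without OpenCV, computes a normalized cross-correlation score (the CV_TM_CCORR_NORMED idea: the patch-template dot product divided by the square root of the product of their energies) at every position and keeps the largest one, which is the role minMaxLoc() plays in the text above. The Match struct and the function bestCcorrNormed are hypothetical, and treating a zero denominator as a score of 0 is an assumption of this sketch.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Match { int x, y; double score; };

// image is W x H, templ is w x h, both row-major and single-channel.
Match bestCcorrNormed(const std::vector<float>& image, int W, int H,
                      const std::vector<float>& templ, int w, int h) {
    assert(w > 0 && h > 0 && w <= W && h <= H);
    double templEnergy = 0.0;
    for (float t : templ) templEnergy += static_cast<double>(t) * t;

    Match best{0, 0, -1.0};
    for (int y = 0; y <= H - h; ++y) {
        for (int x = 0; x <= W - w; ++x) {
            double cross = 0.0, patchEnergy = 0.0;
            for (int yy = 0; yy < h; ++yy) {
                for (int xx = 0; xx < w; ++xx) {
                    const double t = templ[static_cast<std::size_t>(yy) * w + xx];
                    const double p = image[static_cast<std::size_t>(y + yy) * W + (x + xx)];
                    cross += t * p;
                    patchEnergy += p * p;
                }
            }
            const double denom = std::sqrt(templEnergy * patchEnergy);
            // Assumption: an all-zero patch or template scores 0.
            const double score = denom > 0.0 ? cross / denom : 0.0;
            if (score > best.score) best = Match{x, y, score};
        }
    }
    return best;
}
```

For non-negative pixel values the score lies in [0, 1], and it reaches 1 only when the patch is a scaled copy of the template.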
